Quantity Has a Quality All Its Own

A short summary of and reactions to Erik Hoel on how science got its scale

Intellectual Crossroads

The coolest theory I learned in 2023 is an attempt to explain the weird, nagging gap in physics between the standard model and general relativity. Even stranger, it’s nestled in a discussion of consciousness and neuroscience, bouncing between information theory and philosophy. Here is why I cannot stop thinking about Erik Hoel’s The World Behind the World, and specifically Chapter 10, How Science Got Its Scale. I encourage you to read the book: Hoel is an expert in his field and a talented writer, so the material is engaging and tractable. Indeed, I claim only curiosity about these areas, not expertise, so assume any mistakes in this summary are my own. I want to give you just a taste of the issues rattling around in my head and how his framing of causal emergence has given me some new questions to ask.

Consistency is the Hobgoblin of Little Universes

The basic philosophical problem is this: if the world can always be broken down into smaller units acting mechanically, how can free will exist? If you are just atoms, and atoms are just quarks, and at some point below quarks it stops and we understand all the math, and it’s math all the way down, are you nothing more than the equations radiating from whatever bottom-level thing is bouncing along? Or does the breakdown go on forever? And recalling the Ship of Theseus, what does that say about who you are when those parts change?

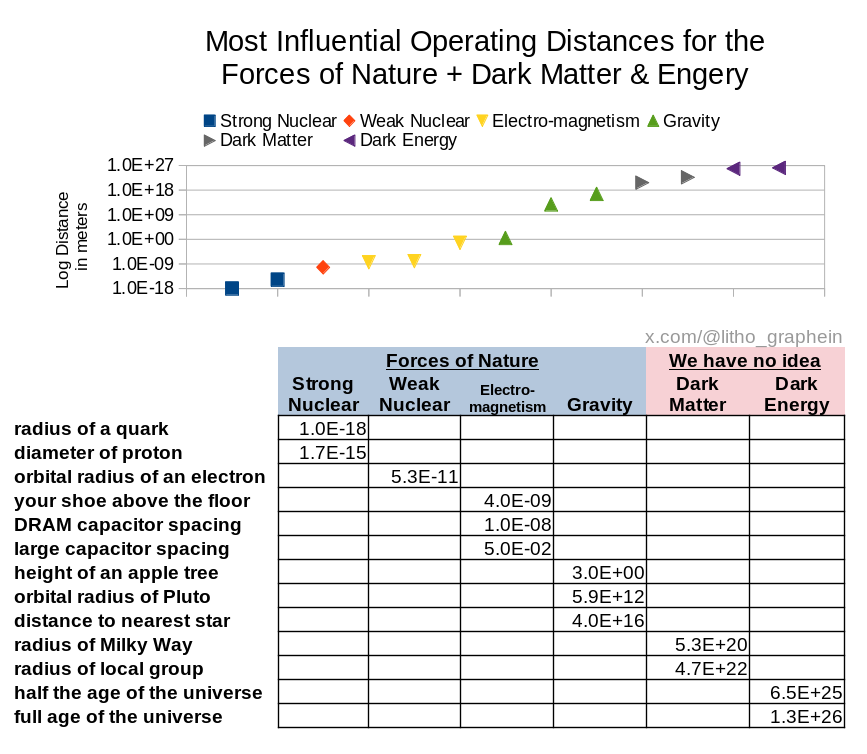

The basic scientific problem is that we’ve done a pretty good job of mapping the universe around us and, uhh, the standard model, built on electromagnetism and the weak and strong nuclear forces, doesn’t play nice with general relativity. Through the lens of an engineer, both are useful for understanding the world, which is to say each accurately predicts interactions in its own domain but not everywhere, so you’d best know where you are standing. The best we can say is that each is incomplete, yet all of our tests keep reinforcing that each is accurate and (as best I can tell) self-consistent. Interestingly, the scales at which each force dominates the others map neatly onto a log-scale diagram:

In the replies to the source tweet, the creator of that chart points out that electromagnetism and gravity are the only two forces whose ranges overlap, which is notably where the standard model and relativity break down, as though it’s some kind of inflection point.

Scale is All You Need

Hoel offers a potential insight into this log-scale map of forces and its transition point: causal emergence. Before you can appreciate causal emergence, you need some context. It starts with defining the microscale, whatever that thing is at the bottom of the universe. Each level above it is a macroscale, a “dimension reduction”, which is “when some data is left out of a description, like a coarse-grain or average or grouping”. If all the world can be explained at the microscale, then the macroscale adds nothing new; this line of reasoning is known as the exclusion argument.

While one issue with the exclusion argument is that elements of the microscale can change while the macroscale stays stable, its more troublesome conclusion is that “[moving] down the spatiotemporal ladder should always give a better understanding of what causes what, never a worse one”. That is where causal emergence strikes, claiming that “[causal] emergence occurs when macroscales have more causal influence than their underlying microscales over the exact same events”. Hoel offers a few straightforward proofs of how this works in practice and evidence from many domains, which I am omitting because you should read the book. He puts a stake in the ground that the phenomenon is so widespread it is nearly unavoidable.

How can causal emergence be so common across macroscales? It is simply error correction: a macroscale increases the determinism (how reliably a given input leads to one particular output) and/or decreases the degeneracy (how often different inputs lead to the same output). In his own words:

Philosophers and scientists will sometimes distinguish between what they call “weak” and “strong” emergence, with the weak kind being uncontroversial but also unexciting, and the strong kind being so controversial that only a slim minority believe it exists. Weak emergence just means that macroscales are difficult to predict or understand in practice, but are reducible to the microscale in theory. Strong emergence means that new irreducible properties are added up at the macroscale, which looks a lot like it requires new physical laws for just the macroscale. Yet these present us with bad choices, as weak emergence is relatively uninteresting, whereas strong emergence is too interesting! Causal emergence offers a middle road, being neither weak nor strong emergence. It holds that the elements and states of macroscales are reducible to underlying microscales without loss, but that the causation of the macroscale is not. At the same time, this “extra” causation is not unexplainable or mysterious. It’s just error correction.
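To make determinism and degeneracy concrete, here is a minimal Python sketch. It assumes effective information (EI), the measure of causal influence used in Hoel's causal emergence papers, computed by setting every state with equal probability and measuring in bits. The four-state transition matrix is a hypothetical toy system, not an example from the book: states 0, 1, and 2 hop at random among themselves, while state 3 always stays put. Grouping {0, 1, 2} into one macro state A and leaving {3} as macro state B turns the noisy micro dynamics into a perfectly deterministic macro system.

```python
from math import log2

# Hypothetical toy system (an illustrative assumption, not from the book):
# micro states 0, 1, 2 hop uniformly among themselves; state 3 always stays put.
# Row i is the probability distribution over next states given current state i.
MICRO_TPM = [
    [1/3, 1/3, 1/3, 0.0],
    [1/3, 1/3, 1/3, 0.0],
    [1/3, 1/3, 1/3, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Coarse-grained view: macro state A = {0, 1, 2}, macro state B = {3}.
# At this scale A always leads to A and B always leads to B.
MACRO_TPM = [
    [1.0, 0.0],
    [0.0, 1.0],
]


def entropy(dist):
    """Shannon entropy of a probability distribution, in bits."""
    return -sum(p * log2(p) for p in dist if p > 0)


def effect_distribution(tpm):
    """Average next-state distribution when every current state is set equally often."""
    n = len(tpm)
    return [sum(row[j] for row in tpm) / n for j in range(n)]


def determinism(tpm):
    """log2(n) minus the mean row entropy: how reliably each state picks one next state."""
    n = len(tpm)
    return log2(n) - sum(entropy(row) for row in tpm) / n


def degeneracy(tpm):
    """log2(n) minus the entropy of the average effect: how much states funnel together."""
    n = len(tpm)
    return log2(n) - entropy(effect_distribution(tpm))


def effective_information(tpm):
    """EI in bits, expressed as determinism minus degeneracy."""
    return determinism(tpm) - degeneracy(tpm)


if __name__ == "__main__":
    for name, tpm in [("micro", MICRO_TPM), ("macro", MACRO_TPM)]:
        print(name,
              "determinism:", round(determinism(tpm), 3),
              "degeneracy:", round(degeneracy(tpm), 3),
              "EI:", round(effective_information(tpm), 3))
```

In this toy case the micro description scores roughly 0.81 bits of EI and the macro description a full 1 bit, with zero degeneracy at both scales, so the coarse-grain wins purely by raising determinism. That is the flavor of "error correction" in the quote above: the grouping throws away noise that never mattered to what happens next.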

He extends the argument through to natural scales of description:

a natural scale of description is the point where the most minimal of dimension reductions leads to the maximum gain in terms of the degree of causal influence parts of the model have on one another

From there, he argues that all domains of science are “error-correcting encodings of physics”.
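The idea of a natural scale can be sketched the same way: try every possible grouping of micro states, measure EI for each, and keep the winner. This brute-force Python sketch rests on stated assumptions. The four-state system is a hypothetical toy (states 0 to 2 shuffle among themselves, state 3 stays put). Macro transitions are built by averaging over the micro states inside each group. Ties are broken in favor of the grouping that keeps the most macro states, which is one reading of "the most minimal of dimension reductions". Brute force only works for tiny systems, since the number of possible groupings (the Bell numbers) explodes quickly.

```python
from math import log2

# Hypothetical toy system (an illustrative assumption, not from the book):
# states 0-2 hop uniformly among themselves; state 3 always stays put.
TPM = [
    [1/3, 1/3, 1/3, 0.0],
    [1/3, 1/3, 1/3, 0.0],
    [1/3, 1/3, 1/3, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]


def effective_information(tpm):
    """EI in bits: mean KL divergence of each row from the average row (uniform interventions)."""
    n = len(tpm)
    effect = [sum(row[j] for row in tpm) / n for j in range(n)]
    total = 0.0
    for row in tpm:
        total += sum(p * log2(p / effect[j]) for j, p in enumerate(row) if p > 0)
    return total / n


def partitions(items):
    """Yield every way to split items into non-empty groups (set partitions)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for smaller in partitions(rest):
        # Put `first` into each existing group in turn...
        for i in range(len(smaller)):
            yield smaller[:i] + [[first] + smaller[i]] + smaller[i + 1:]
        # ...or give `first` a group of its own.
        yield [[first]] + smaller


def coarse_grain(tpm, partition):
    """Macro TPM: average each group's micro rows, summing probability that lands in each group."""
    macro = []
    for src in partition:
        row = []
        for dst in partition:
            mass = sum(tpm[i][j] for i in src for j in dst)
            row.append(mass / len(src))
        macro.append(row)
    return macro


def natural_scale(tpm):
    """Grouping with the highest EI; ties go to the grouping that keeps more macro states."""
    best = None
    for part in partitions(list(range(len(tpm)))):
        ei = effective_information(coarse_grain(tpm, part))
        key = (round(ei, 9), len(part))  # rounding absorbs float noise before tie-breaking
        if best is None or key > best[0]:
            best = (key, part, ei)
    return best[1], best[2]


if __name__ == "__main__":
    part, ei = natural_scale(TPM)
    print("micro EI:", round(effective_information(TPM), 3))
    print("natural scale:", sorted(sorted(g) for g in part), "EI:", round(ei, 3))
```

For this toy system, the search lands on the grouping {0, 1, 2} and {3}, at 1 bit of EI versus roughly 0.81 bits for the raw micro description; every other grouping scores lower. Real systems would need far smarter search than enumerating every partition.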

It’s AAABBBCCC All The Way Up

Hoel journeys back into the philosophical, concluding with a scientific case for free will that is well worth your time. However, it is this theory that has been trapped in my head. I hear it when we look at reconciling the standard model and relativity. Is relativity the microscale without error correction? Perhaps it’s an orthogonal (competing?) encoding pattern for information in the universe? I hear it when progress studies asks: are ideas getting harder to find? Have we simply explained the knowable universe of things at this scale? Is it harder to derive meaningful information at smaller scales because there’s inherently less error correction? I hear it when I see unsupervised machine learning: are these algorithms detailing macroscales we’ve never noticed? Maybe ones we could never see?

Finally, revealing my own engineering inclinations: is error correction in this context just a useful framing, or is there a mechanism to exploit? For example, do the error-correcting macroscales we already understand hold lessons for how to stabilize exotic, unstable elements, or even the fleeting Higgs boson?

Hopefully you’ve been captured by this theory too, and if you see it in the world around you, let me know where in the comments.

If you want to hear more from Erik, I encourage you to subscribe to his Substack, the always fascinating The Intrinsic Perspective.