Technology Development and the Collapse of IDM 2.0

Silicon Statesmanship #5: Explaining TD, TD culture, and how it hobbles IDMs

Previously: Silicon Statesmanship 1, 2, 3, 4

Pat Gelsinger “retired” this week from Intel, reportedly after losing the board’s confidence. His strategy of IDM 2.0, in which Intel sought to manufacture products for itself (known as Intel Products) and for others (known as Intel Foundry), is all but dead. Commentators have wondered if Gelsinger could have done anything different in his three short years. I believe he could have, but understanding why requires some understanding of how semiconductor manufacturers are organized.

Technology development is a core function of semiconductor manufacturing. In some companies, it is literally called technology development, though it is often specified as process technology development or logic technology development. These all refer to the same job function, and unless otherwise specified, assume I am talking about whoever is doing that function inside the organization. Moore’s Law is literally built by these teams. I have not seen a good explainer of what they do and why, so I wanted to provide that as context for how they operate inside big companies.

I strongly suspect Gelsinger failed to radically change Intel’s fortunes because he did not radically change the culture of Intel’s TD group. In fact, Intel may not even have the right skills inside the company to serve external customers. There is lots of evidence that he tried to change this, notably through the failed acquisition of Tower Semiconductor. I acknowledge it is possible he did fix it, given how fast Intel is churning out new nodes, but in that case it did not happen fast enough for the board’s liking, which means the board should be fired.

So we’ll start at the beginning to understand what TD is given and what they are expected to produce, how the TD team interacts with other teams in an IDM model and a foundry model, and then analyze why TD’s culture explains IDM 2.0’s struggles.

Inside Technology Development: The Heartbeat of Moore’s Law

Nodes, Transistors, and the Science of Scaling

Moore's Law is the observation that the number of transistors on an integrated circuit will double every two years with minimal rise in cost. Modern nodes take much longer and have actually increased in cost per transistor, so Moore’s Law has weakened substantially but still advances. Derived from a paper written by Gordon Moore, a key technologist in the early history of semiconductors and a founder of Intel, Moore’s observation was based on the trend of what successful semiconductor companies were delivering at the time. That trend was consistent with his own experience: Intel’s strategic planning called for Intel’s manufacturing team to deliver roughly double the number of transistors every two years at the same cost to keep their products ahead of the competition. Delivering on that plan became the job of an organizational function known as technology development (TD):

Marketing Inputs (Gordon Moore era): More transistors, ~every 2 years, same cost
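To make that marketing input concrete, here is a minimal sketch of the arithmetic, assuming an idealized doubling exactly every two years at constant chip cost. The starting transistor count and the chip cost are hypothetical numbers chosen only for illustration, not figures from Intel.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Transistor count after `years`, doubling every `doubling_period_years`."""
    return initial_count * 2 ** (years / doubling_period_years)


def cost_per_transistor(chip_cost: float, transistor_count: float) -> float:
    """Cost of one transistor if the whole chip costs `chip_cost`."""
    return chip_cost / transistor_count


if __name__ == "__main__":
    start_count = 1_000_000   # hypothetical starting transistor count
    chip_cost = 100.0         # hypothetical chip cost, held constant
    for years in (0, 2, 4, 10):
        count = projected_transistors(start_count, years)
        unit_cost = cost_per_transistor(chip_cost, count)
        print(f"year {years:>2}: {count:>12,.0f} transistors, "
              f"{unit_cost:.2e} per transistor")
```

Under these assumptions, the cost per transistor halves with each doubling, which is the part of the bargain that modern nodes no longer reliably deliver.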

Bringing chips to market on a new process technology requires coordination between three functions: product marketing, chip designers, and technology development. Product marketing cares about the product that gets built: what workloads get targeted, how well it performs, when it is available in volume, and the value and cost of the chip. Chip designers primarily work in the realm of circuit schematics, essentially logic blueprints for how the chip should function. They know optimal circuitry for balancing power and performance, but they need to know what the process looks like. A rough analogy is that they are architects designing houses out of materials that the technology development team creates. At the leading edge, that process is still in development, so chip designers rely on the teams developing that technology to coordinate with them on the performance, process assumptions, and design rules for that new process.

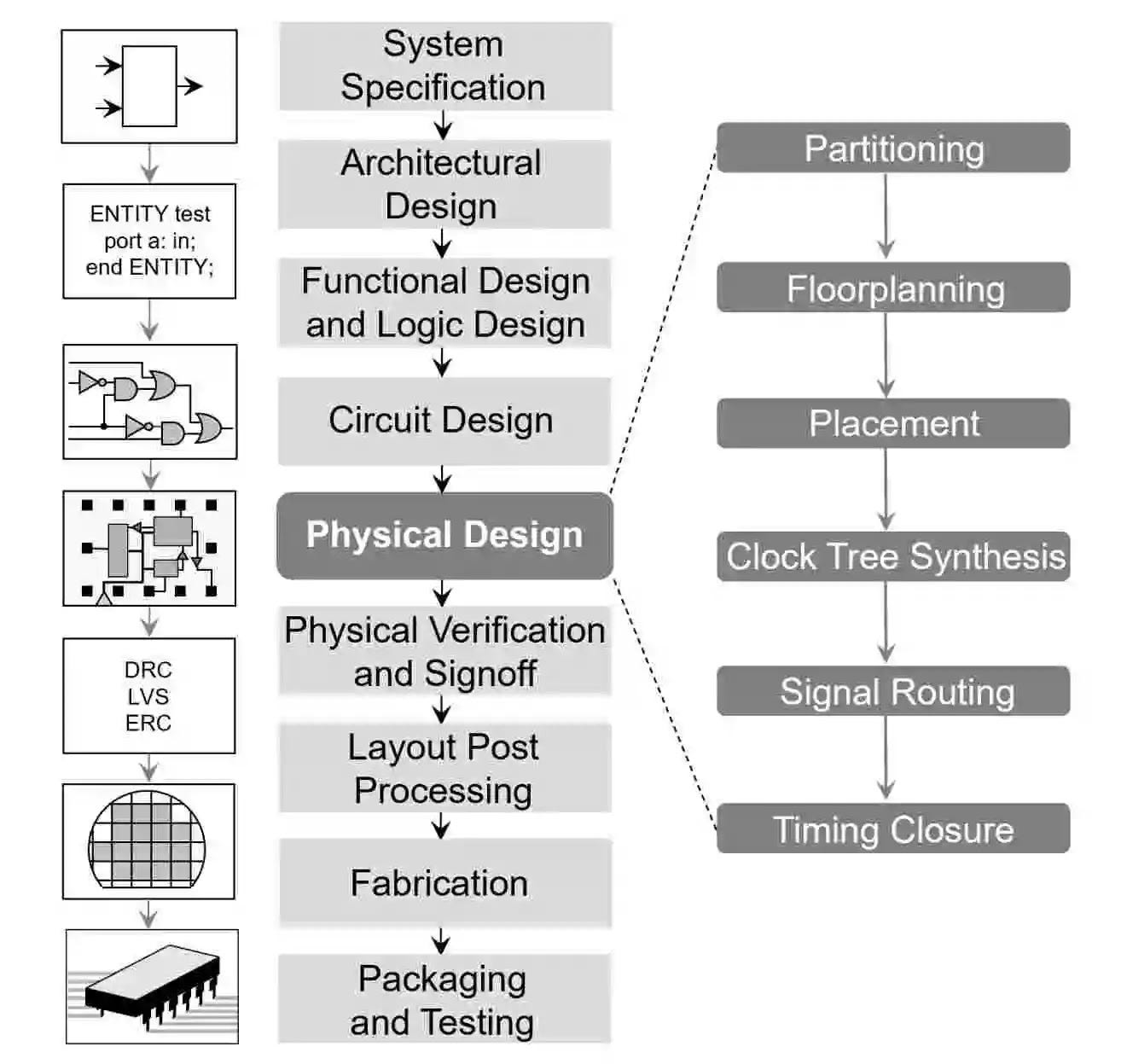

Technology development is best thought of as the front-end for silicon manufacturing. TD fundamentally must expose silicon manufacturing as software. Chip designers are the customers for TD. Chip designers specify the system spec, the architecture, the functional design of the chip, how the logic should work, and how the circuits should be connected. TD must tell them how to actually manufacture those circuits, or rather, the optimal way to place those circuits to get the best results from that process. In some ways, TD is working in reverse from the chip designer, trying to synthesize billions of dollars and decades of research of semiconductors into something that is performant, reliable, and cost-effective for chip designers.

Ann Kelleher, Intel’s outgoing head of Technology Development, said, “I view the process and technology, as the heart at Intel, because if we do our job really well, we deliver not just the present, but we’re also making the future. I tell my team that we need to deliver really well the present, but we're also making the future.” That is, TD at any semi company, including Intel, is responsible for Moore’s Law. Every silicon manufacturer must have a group responsible for TD, even if not every group calls it TD like Intel does.

TD’s core deliverable is the node. A node in semiconductor manufacturing is a collection of features that a production line can create on an integrated circuit. Each node is fundamentally transistors (switches) and interconnects (wires connecting the switches). Each node is characterized by the power it consumes, its performance i.e. how fast the switches can switch, the area or the physical size of common features, and cost. The node is the ultimate deliverable, but to access it, chip designers need it codified in software. The node will ultimately need to be packaged in a process design kit (PDK), which expresses in code the design rules for turning pure logic into transistor schematics. A PDK is a combination of process assumptions and the design rules for a given node. Process assumptions are all the physical parameters like wire resistance, wire width, line width roughness and many electrical and lithographic parameters. Design rules are established on top of process assumptions. In the VLSI design flow shown above, the PDK is the software tool that translates the desired circuit design into a physical design. The physical design is only appropriate for that specific node.

TD Output: Node(Power, performance, area, cost)
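One way to picture the relationship between a node and its PDK is as plain data structures. The sketch below is only an illustration: every field name, unit, and value is hypothetical, and the rule derivation is a toy stand-in for what is really a large body of engineering work. It is meant to show the layering described above, where design rules sit on top of process assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """PPAC summary of a node. All values are relative and illustrative."""
    name: str
    power: float        # relative switching power (lower is better)
    performance: float  # relative switching speed (higher is better)
    area: float         # relative area of a common feature (lower is better)
    cost: float         # relative cost (lower is better)


@dataclass(frozen=True)
class ProcessAssumptions:
    """A few physical parameters; a real PDK carries far more."""
    wire_resistance_ohm_per_um: float
    min_wire_width_nm: float
    line_width_roughness_nm: float


@dataclass(frozen=True)
class DesignRules:
    min_wire_width_nm: float
    min_wire_spacing_nm: float


@dataclass(frozen=True)
class PDK:
    node: Node
    assumptions: ProcessAssumptions
    rules: DesignRules


def derive_rules(assumptions: ProcessAssumptions,
                 roughness_margin: float = 2.0) -> DesignRules:
    """Toy derivation (an assumption, not a real methodology): pad the
    minimum printable width by a multiple of the line width roughness."""
    padded = (assumptions.min_wire_width_nm
              + roughness_margin * assumptions.line_width_roughness_nm)
    return DesignRules(min_wire_width_nm=padded, min_wire_spacing_nm=padded)


if __name__ == "__main__":
    node = Node("example-node", power=0.7, performance=1.2, area=0.5, cost=1.3)
    assumptions = ProcessAssumptions(100.0, 20.0, 1.5)
    pdk = PDK(node, assumptions, derive_rules(assumptions))
    print(pdk.rules)
```

The point of the structure is that a change to any process assumption ripples into the design rules, which is why a PDK revision is so disruptive to designers downstream.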

Moore’s Law fundamentally means that a new node is not the best one after about 2 years, so many aspects of each node must be updated with each new generation. Here, I’m going to drop an assertion that most people either miss or don’t fully appreciate about Moore’s Law: smaller transistors are better because they use less power to switch, can usually switch faster, and take up less space. Fully explaining this assertion would be another essay, so feel free to ask for nuance in the comments (there’s lots of it). Taking up less space means both that you can fit more in the same area and that you can create more transistors with the same number of lithography steps. Drawing smaller transistors starts with being able to draw smaller features i.e. being able to precisely hit materials with light at increasingly smaller wavelengths. Creating smaller features is primarily the field of lithography, though the entire process has been optimized. Other critical steps include deposition (placing material in layers as thin as a single atom in a precise place) and etch (removing those layers in precise locations), which allows for the bulk construction of these fine features over time. Together, these shaped the manufacturing inputs to technology development:

Manufacturing Inputs: Tools (e.g. lithography, etch, deposition), materials research
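The link between smaller features and more transistors is just geometry. As a rough sketch, assuming an idealized shrink in which every linear dimension scales by the same factor (real nodes do not shrink uniformly), the density gain is the inverse square of that factor. The 0.7x figure below is the commonly cited rule of thumb for a full node step, used here as an assumption.

```python
def density_gain(linear_shrink: float) -> float:
    """Factor by which transistors per unit area grow when every linear
    dimension is multiplied by `linear_shrink` (0 < linear_shrink <= 1)."""
    if not 0 < linear_shrink <= 1:
        raise ValueError("linear_shrink must be in the interval (0, 1]")
    return 1.0 / linear_shrink ** 2


if __name__ == "__main__":
    for shrink in (1.0, 0.7, 0.5):
        print(f"{shrink:.1f}x linear shrink -> "
              f"{density_gain(shrink):.2f}x transistors per area")
```

A 0.7x linear shrink gives roughly twice the transistors in the same area, which is where the doubling per node comes from.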

The Shape of Progress: Transistors and Trade-Offs

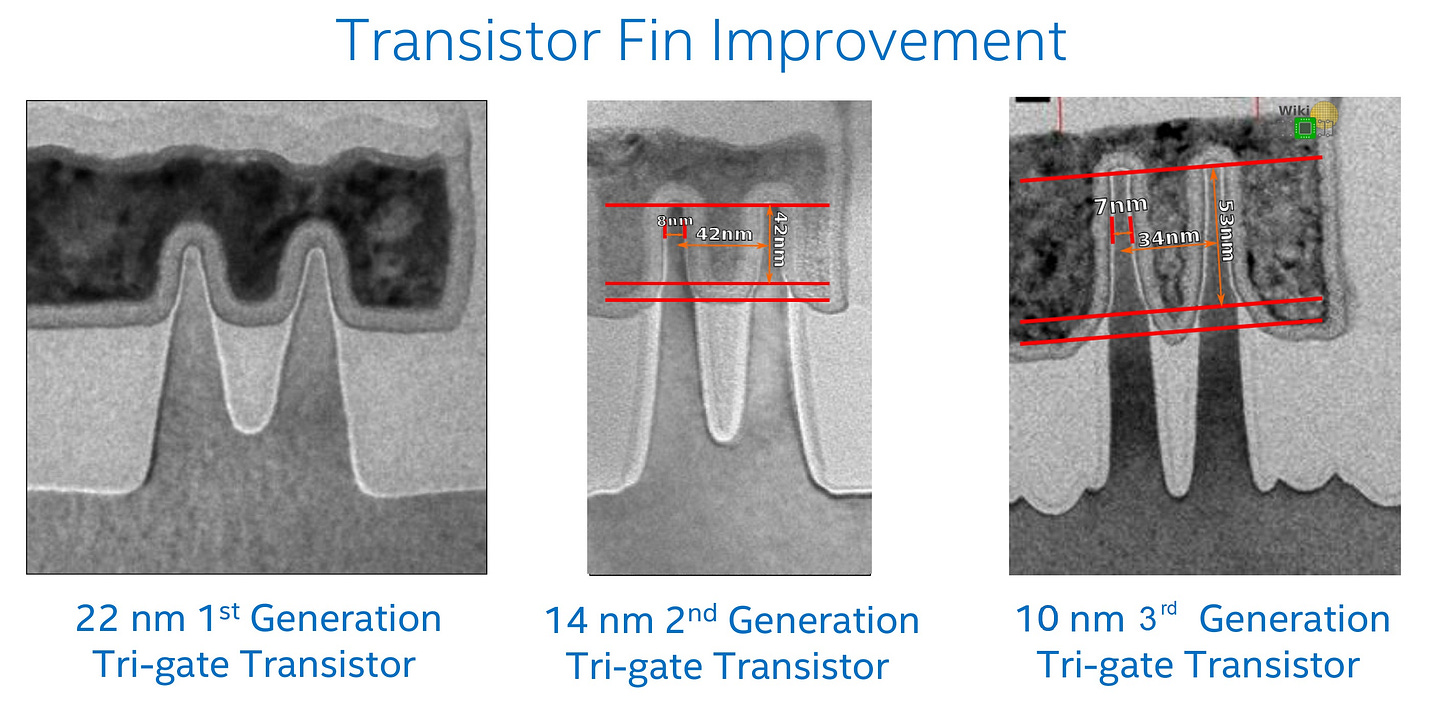

Drawing smaller transistors was how most of Moore’s Law worked until about 2005, a process known as Dennard scaling which advanced until it hit the thermal limit for silicon. We still draw smaller transistors, but the shape of the transistor became much more important to getting performance improvements. Those shapes are their own field of research that must be curated and edited to be suitable for a high-volume manufacturer. Until 28 nm, most transistors were planar. FinFETs were necessary to start scaling performance with Intel’s 22 nm. Now, with process nodes <5 nm, a new design is necessary, called Gate-All-Around FET or GAA. For reference, a single atom is 0.1-0.5 nm, so at ~5 nm this entire process is taking place on the scale of roughly 10 to 50 atoms. Electrons start behaving less predictably at this scale, and new designs are meant to make them act more predictably through careful selection of the shape and material.

The shapes can be biased in certain ways. Changing the specific dimensions of source, gate, and drain optimizes transistors for different applications. A high-power device may be willing to consume more power if it can switch faster. A low-power device may prefer the opposite. If a node wants to offer both, it needs to determine which transistor shapes it can support in a catalog. The question for each shape is if “the juice is worth the squeeze”. New shapes introduce chances for errors. TD has an incentive to push designers towards what they know works best, though designers want to push TD towards what they believe will give them the best performance (and make TD figure out how to do this with high quality). How these conflicts are resolved is a key to understanding why IDMs make for awkward foundry partners.

Research Inputs: Transistor shapes, shape optimizations

The Wiring Problem: When Chips Are Cities

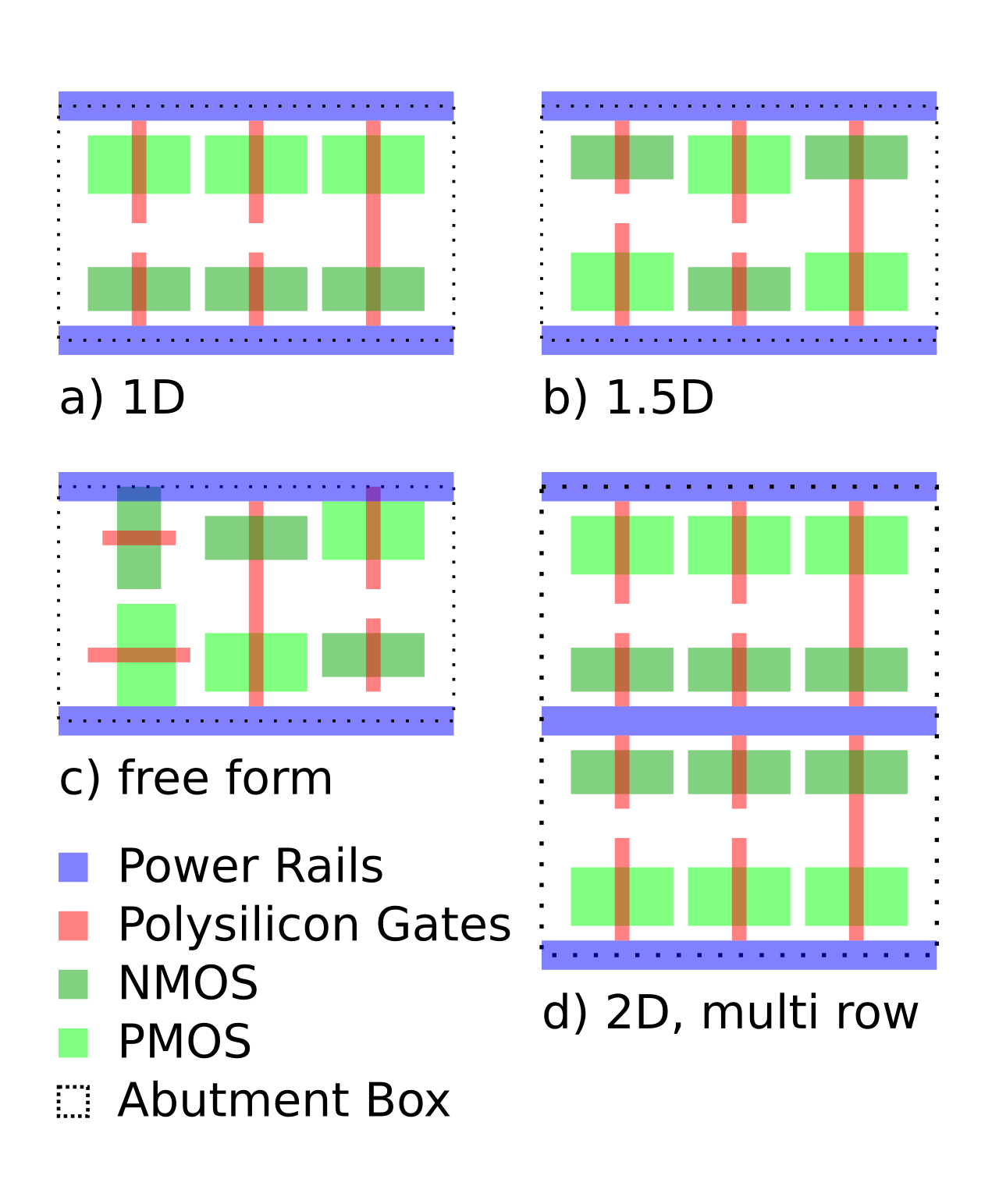

With an understanding of the minimum feature size the tooling can support and an idea of which shapes deliver optimal performance, TD can start assembling these transistors into cells. Standard cells are collections of transistors and interconnects that implement basic logic functions. Defining standard cells and how they can route together is a core way to define a node in software. The cell structure is often defined by the cell height, minimum transistor dimensions, minimum spacing between transistors, and wire placement. Each of these decisions constrains chip designers in different ways as they try to optimize chip-level performance.

In modern chips, signals must travel between different parts of the circuit within a clock cycle. The longer the wires (or interconnects), the more time it takes for the signal to propagate due to RC delays (resistance and capacitance effects). If a signal takes too long, it can cause timing violations, leading to errors in computation. Think of it as traffic on a highway: the farther the car has to go, the longer it takes, especially if the road gets congested.

Long wires also consume more power because they require more energy to drive signals across the resistance and capacitance of the wire, much as pushing a heavy object farther requires more energy than moving it a short distance. This is critical in low-power design (e.g. mobile devices) where battery life is a constraint. Efficient chip design tries to minimize wire lengths to reduce delays and power consumption. However, as chips become more complex (e.g., in systems on chip (SoCs)), wire reach determines how easily different functional blocks can communicate. This leads to trade-offs between chip area, performance, and manufacturability.
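The wire-length penalty can be put into numbers with a first-order model. The sketch below uses the Elmore approximation for a uniform, unbuffered wire, where delay grows with the square of length, and the standard C·V² estimate for the energy drawn to charge a wire once. The per-micron resistance and capacitance, the supply voltage, and the clock frequency are hypothetical values picked for illustration, and real designs break long wires up with repeaters, which this ignores.

```python
def wire_delay_s(length_um: float, r_ohm_per_um: float,
                 c_f_per_um: float) -> float:
    """Elmore delay of a uniform distributed RC wire: 0.5 * (r*L) * (c*L)."""
    return 0.5 * r_ohm_per_um * c_f_per_um * length_um ** 2


def switching_energy_j(length_um: float, c_f_per_um: float,
                       vdd_v: float) -> float:
    """Energy drawn from the supply to charge the wire once: C * V^2."""
    return c_f_per_um * length_um * vdd_v ** 2


def meets_timing(length_um: float, r_ohm_per_um: float,
                 c_f_per_um: float, clock_hz: float) -> bool:
    """True if the wire delay alone fits inside one clock period."""
    return wire_delay_s(length_um, r_ohm_per_um, c_f_per_um) <= 1.0 / clock_hz


if __name__ == "__main__":
    R_PER_UM = 10.0      # hypothetical wire resistance, ohms per micron
    C_PER_UM = 0.2e-15   # hypothetical wire capacitance, farads per micron
    VDD = 0.8            # hypothetical supply voltage, volts
    CLOCK_HZ = 3e9       # hypothetical clock frequency
    for length in (100.0, 500.0, 1000.0):
        delay = wire_delay_s(length, R_PER_UM, C_PER_UM)
        energy = switching_energy_j(length, C_PER_UM, VDD)
        ok = meets_timing(length, R_PER_UM, C_PER_UM, CLOCK_HZ)
        print(f"{length:>6.0f} um: delay {delay:.2e} s, "
              f"energy {energy:.2e} J, fits in a cycle: {ok}")
```

In this model, doubling a wire's length doubles its switching energy but quadruples its delay, which is why wire reach becomes a first-class constraint on where blocks can be placed.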

Imagine designing a city. If essential services (like hospitals or fire stations) are too far from homes, it becomes inefficient and potentially dangerous. At smaller process nodes (e.g., 5nm or below), wire reach becomes even more critical because wires don't scale in the same way as transistors. They occupy more area, contribute disproportionately to delays, and impact overall performance. If your house shrinks, but the roads don’t, it’s harder to fit everything efficiently.

Remember that nodes usually improve on previous nodes by drawing smaller features. But sometimes not all of the features can be reduced by the same amount, as it can change performance or manufacturability. As a result, sometimes features are simply removed. In SpaceX parlance, the best part is no part. However, there are tradeoffs. Fewer transistors mean each transistor must be more performant to achieve equivalent performance. Alternatively, the same number of transistors would achieve higher performance.

The changes in standard cells are why circuits designed in previous nodes will not translate to new nodes without porting. Porting is the process of re-mapping desired functions into the design rules for that new process node. The process carries significant development costs, as the design needs to be re-validated in silicon and any issues need troubleshooting. In the modern semiconductor manufacturing world, electronic design automation (EDA) vendors like Cadence, Synopsys, and Siemens own libraries of standard cells. The EDA vendors partner with the TD team to ensure standard cells that most designers need are available on their platform.
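A toy design rule check shows why a layout that is clean on one node needs porting to another. This is a deliberately simplified sketch: the rule set (minimum width, minimum spacing, and a fixed track pitch that wires must snap to), the two sets of rule values, and the three-wire "cell" are all hypothetical, and real rule decks contain thousands of rules.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Wire:
    x_nm: int      # position of the wire's left edge
    width_nm: int


@dataclass(frozen=True)
class Rules:
    min_width_nm: int
    min_spacing_nm: int
    track_pitch_nm: int  # wires must start on a multiple of this pitch


def check(wires: list[Wire], rules: Rules) -> list[str]:
    """Return a human-readable list of rule violations (empty if clean)."""
    violations = []
    ordered = sorted(wires, key=lambda w: w.x_nm)
    for w in ordered:
        if w.width_nm < rules.min_width_nm:
            violations.append(f"wire at {w.x_nm} nm is too narrow")
        if w.x_nm % rules.track_pitch_nm != 0:
            violations.append(f"wire at {w.x_nm} nm is off the track grid")
    for left, right in zip(ordered, ordered[1:]):
        gap = right.x_nm - (left.x_nm + left.width_nm)
        if gap < rules.min_spacing_nm:
            violations.append(
                f"wires at {left.x_nm} and {right.x_nm} nm are too close")
    return violations


if __name__ == "__main__":
    old_node = Rules(min_width_nm=40, min_spacing_nm=40, track_pitch_nm=90)
    new_node = Rules(min_width_nm=20, min_spacing_nm=20, track_pitch_nm=48)
    cell = [Wire(0, 45), Wire(90, 45), Wire(180, 45)]  # drawn for old_node
    print("old node:", check(cell, old_node))
    print("new node:", check(cell, new_node))
```

The cell is clean under the rules it was drawn for, but two of its wires fall off the track grid under the second rule set even though every wire is comfortably wide enough. Re-drawing the cell to satisfy the new rules, and then re-validating it, is the porting work described above.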

The PDK is nearly complete. Alongside the standard cell libraries, foundries provide the other information needed to place those cells appropriately: design rules on layout, spacing, and layer usage; physical layouts for placement and routing; abstracted layout information for automated routing; timing, power, and noise models; detailed circuit descriptions for analog simulation; and device models to simulate the final chip’s performance and verify it is performing as expected.

To summarize, TD takes as inputs market requirements, manufacturing capabilities, and novel research ideas to create a node, characterized in software as a PDK. With the PDK, chip designers can effectively plan their chips.

The Culture of TD

TD has arguably the most demanding job in semiconductor manufacturing. TD has to model and predict how quantum effects at the sub-nanometer level will translate into making AI chips attain even higher model FLOPs utilization. They must develop features on the scale of tens of atoms that can be manufactured billions of times. TD often has to call its shot 3-7 years before a transistor ever gets produced, committing the company to fabrication processes nearing the $40B mark. If they get it right, the chip designers have access to the best, highest-performing technology before anyone else. The chip designers will pay huge prices for the wafers because they too can dominate their chip markets by being better, faster, and cheaper. If TD screws up, the chips become too expensive or too late. In the worst case, the $40B fab can literally be too expensive to turn on because of the cost of running the thousands of tools inside. Generally, when you hear of a process node being delayed, it is because something has gone wrong in TD’s purview.

More than anyone, TD carries the responsibility for Moore’s Law. In a word, TD teams are cracked. They know it too. To get a sense of their self-esteem, consider the case of Sam Altman, CEO of OpenAI with previous experience guiding the Y Combinator startup accelerator. As far as I can tell, Sam has no experience manufacturing semiconductors. Sam Altman presented a grand vision for how TSMC could spend $7 trillion to make brand new factories to power his aspirations for AI. TSMC reportedly dismissed Sam as a “podcast bro.”

TD teams are people. Understanding how they interact with teams in different business models is critical to understanding how TD manages its responsibility to the company.

Intel’s TD Culture: Designed for Dominance, Not Flexibility

In the IDM model where companies design, manufacture, and market their own chips, the business groups (i.e. product marketing) define what success looks like—what markets are the future? What products will win those markets? How different do they have to be vs. today? Everyone has an opinion here, and the consequences of the investments are so important that frequently the CEO is the decision maker. When critics say they want an engineer as CEO of the company, it typically means one who can synthesize where analysis says the market is going with the right technology strategy to get there i.e. what do you really believe about the markets and what is the best way for TD to address it. Operating the fabs efficiently is critical, but they can only optimize what TD has defined.

One group has not been mentioned very much: the chip designers. In Intel’s case, it is a given they would make CPUs, the only question is for which markets. CPU designers can do novel things with their architecture, implementing new circuits, adding additional cache, and adding accelerators for new kinds of workloads. Through Andy Grove’s era at Intel, desktop PCs were the dominant computing format, and CPU design was about curation. In manufacturing lore, IBM was great at developing novel kinds of transistors and circuits but it created bloated manufacturing processes that could not do anything particularly well. That era of Intel succeeded by focusing on high-performance designs that TD could manufacture in the millions. Chip design had lots of room to explore but had many constraints by TD in what they could actually produce.

This dynamic is essential to understanding how TD works in an IDM: TD can tell a chip designer “no”. TD is responsible for ensuring their product can be scaled to the millions of units with the right schedule, quality and cost. TD can, and in some cases must, be rigid to ensure the company’s success. If TD delivers something in the product group’s target, it is the chip designer’s responsibility to build the right chip out of the Legos provided. If the chip designer believes they could do a better job with different Legos, it is not TD’s problem. In the most extreme case, TD can change the PDK if they develop a better way of doing things or simply learn something is not working. Changing the PDK would set back the chip design team by ~6 months, as the development flow restarts from physical design. What is the CPU design team going to do about it? If their bonuses are tied to shipping a design in a specific quarter, what can they do but ship as soon as it is ready? Same problem for the schedule. Everyone is looking at TD, asking when it will be ready. If they say “soon”, what recourse do you have? A chip designer cannot take their business elsewhere. If TD misses their schedule and continues to put other teams behind, it destroys team trust and culture.

Similarly, the time and pressure on TD to deliver means that they will take matters into their own hands. The IDMs predated the entire EDA market—they had to build their own EDA tools and process flows. As the EDA market grew around them and standardized designs and process flows, IDMs shrugged. They saw no benefit to changing their development process to something industry standard. When they need to develop libraries, they will only pay to develop and port libraries essential to their product group. If no product would use that cell, why spend money developing or porting it?

The Foundry Advantage: Why TSMC Outpaces Intel

The foundry model has a TD team but (usually) no Product Marketing or Design team to whom they answer. As a result, they have to anticipate what the market wants and work closely with many different customers to ensure they build the right process tech. Given the costs of new fabs, foundries seek out anchor clients: a chip design company that wants to work with the foundry to set TD’s requirements, ensure the process is optimized for themselves, and get the first access to the parts. Apple is famously TSMC’s anchor client.

The cost of being an anchor client is high in both engineering time investment and committing to buy wafers for technology that does not exist yet. While NVIDIA would love to be TSMC’s anchor client, they simply do not buy enough wafers today to do so. Would they want to be an anchor client somewhere else? It is plausible, though the alternative would have to offer schedule, quality, and cost comparable to TSMC’s.

Still, TSMC cannot get too enamored with their anchor client. It is important to service them first and also prepare every other customer to be successful. When TSMC began, they were not the best at anything, but they were pretty good and the only foundry in town. A fabless company had to work with them, and they had to work with fabless teams to get any revenue. If a design team showed up and asked if they could tweak a process, TSMC learned to say yes, assuming the customer would pay for it. As a result, TSMC’s TD culture is one of flexibility to customer requests. They work to say “yes”. They have to anticipate customer desires, especially in sensitive areas like changes to the PDK. To that end, TSMC developed a disciplined process of sharing PDKs with target ranges and clear guidelines about what might change and by how much. I am lacking good data, but my perception is that, at first, this was much slower than Intel’s model. However, it proved more robust over time, especially when Intel infamously struggled to get 10 nm to a production state in the second half of the 2010s. At that point, TSMC had more wafer demand and a better TD team. They have never since trailed Intel in introducing the best process nodes.

By pioneering this model, though, TSMC enabled an ecosystem. In fact, the EDA vendors only really exist because TSMC enabled fabless chip design. Anyone trying to sell IP to a fabless chip designer, including the EDA vendors who maintain their own IP libraries, has to validate it in silicon, typically at their own expense. TSMC has all the best customers, so TSMC is their path to market. As a result, TSMC has the deepest, widest portfolio of tools and IP that costs TSMC nothing.

Lessons from IDM2.0’s Collapse

Pat Gelsinger left Intel this week after a spat with the board, as his strategy of IDM 2.0 went sour. IDM 2.0 was supposed to take the IDM model and add on a foundry. Intel tried this before in 2015-2021, an effort I participated in, but Gelsinger made it the centerpiece of his strategy. To be clear, I had no direct work with TD, I was extremely junior, and had only pleasant interactions with TD members. In truth, they were kind of my heroes. Yet, I heard the stories and saw the inherent conflicts in trying to ask TD to serve both an internal product group and the market. I see their hands all over the collapse.

To win a fabless chip designer’s business, the foundry has to answer some basic questions. The biggest question is whether an IDM will try to compete with the customer. An IDM’s TD team cannot do anything but say they will not and honor it. If they ever give customers the perception that they are competing with them, the business line is over. The next question is how good the node is (again, usually defined by power, performance, area, and cost). The answer is usually “very good…if you design it the way we tell you.” Any foundry gives the same answer, but prospects will quickly find that the standard cells and IP that the IDM supports are only the ones they use. If the prospect requests something different, the answer can range anywhere from “no” to “you are stupid for wanting to do it that way” i.e. the same set of responses they might give an internal CPU designer but a far cry from what TSMC might offer. To accommodate customer requests, a foundry staffs multiple teams around the globe to address customer design questions at all hours. An IDM might staff one design team that works 8am - 5pm Pacific, because that is when their own design teams work. The final question is whether the ecosystem supports the tools and processes they want to use. If they are not using the exact same thing (which they almost never are), the tools and IP would have to be ported to the new process. Someone has to pay for that and, worse, someone has to be the first to use it on a new node.

The incentive structure for TD teams inside an IDM is not conducive to customer service, a core muscle that TSMC had to develop to exist. With Gelsinger’s departure and Samsung’s own semiconductor manufacturing woes, the contradictions of the IDM model servicing outside companies seem obvious. If Intel’s board decides to spin off the foundry (subject to any restrictions by the federal government to receive CHIPS Act subsidies), the TD team will have another layer of the stack to master: pleasing chip designers. An entire generation of technical talent will have to learn they are not the first and last word in semis.

The story of Intel's IDM 2.0 failure underscores a fundamental lesson: aligning technical capabilities, organizational culture, and business strategies is essential in a rapidly evolving industry. Technology Development teams hold the keys to Moore’s Law, but without adapting to external market needs and fostering a customer-centric mindset, even the best-engineered solutions can falter. As the semiconductor landscape shifts, the industry must reckon with how to balance innovation, collaboration, and agility. Intel’s struggles serve as both a cautionary tale and a call to action for companies navigating the complex interplay between legacy systems and future aspirations.

Thanks to Julius Simonelli and lithos_graphien for reviewing drafts of this work. Any errors are my own.
